Mastering AI in Minutes: Prompts, Diffusion Models and RLHF

- Mr_Solid.Liquid.Gas

- Jul 23, 2025

- 11 min read

Updated: Jul 28, 2025

Artificial intelligence no longer hides in research labs—it writes our emails, paints our pictures, and powers the search results we rely on every day. Yet for many people the vocabulary surrounding AI still feels like a secret code. AI Explained: Quick-Read Series breaks down the most important breakthroughs of 2025 into three clear, bite-sized chapters, each packed with practical examples you can apply immediately.

First, “Prompt Engineering 101” reveals how the exact words you type into a system like GPT-4o or Gemini can make the difference between a mediocre response and something truly remarkable. Well-crafted prompts shape an AI’s accuracy, creativity and tone, letting you steer large language models toward the outcome you want—whether that’s drafting marketing copy, analysing data or writing code.

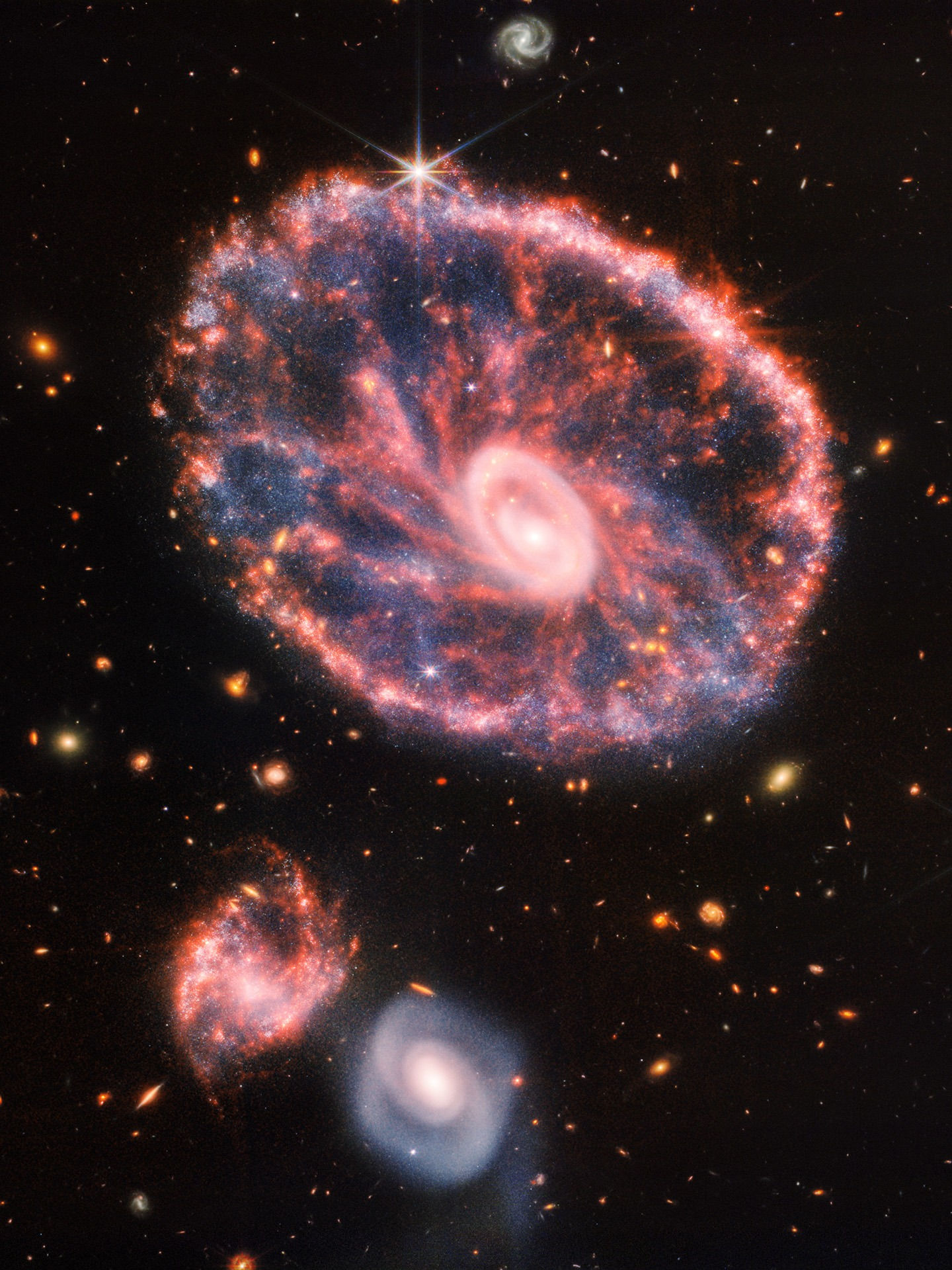

Next, “Diffusion Models vs GANs: Which Generates Better Images in 2025?” offers a front-line comparison of today’s two leading image-generation approaches. You’ll discover why cutting-edge diffusion models excel at photorealism and text rendering, yet classic generative adversarial networks (GANs) still shine in ultrafast video filters, game development and data-augmented healthcare. If your work involves visual content—concept art, product photography, medical imaging—this chapter will help you choose the right tool for the job.

Finally, “Reinforcement Learning in Plain English” demystifies the techniques that align AI behaviour with human expectations. You’ll learn how Reinforcement Learning from Human Feedback (RLHF) refines chatbot etiquette, why lightweight adaptations such as LoRA and QLoRA cut computing costs, and how retrieval-augmented generation (RAG) keeps answers current by tapping external knowledge bases.

Whether you’re a developer adjusting model weights, a creative professional exploring new workflows, or simply an enthusiast eager for clarity, this series offers an accessible road map through today’s most influential AI concepts. Read on to master the vocabulary shaping tomorrow’s applications—and turn cutting-edge research into real-world advantage.

Prompt Engineering 101: Getting the Output You Want Every Time

Keywords: prompt engineering, prompt design, large language models, generative AI prompts

Introduction to Prompt Engineering

In the era of generative AI, mastering prompt engineering has become a powerful tool for creators, developers, and researchers alike. Whether you're using large language models (LLMs) like OpenAI’s GPT, Google’s Gemini, or Meta’s LLaMA, the key to extracting the best results lies in how you structure your inputs — known as prompt design. Much like crafting a search query or coding a program, prompt engineering blends clarity, structure, and creativity to guide the AI towards a useful, accurate, and sometimes even brilliant output.

What is Prompt Engineering?

Prompt engineering is the process of designing effective textual or structured prompts to get desired responses from large language models (LLMs). These models, trained on vast datasets of human language, rely on input prompts to generate responses — whether that's answering questions, writing code, composing poetry, or analyzing data.

At its core, prompt engineering is about understanding the model’s strengths, limitations, and behavior patterns, and then constructing inputs that align with your end goal. A good prompt tells the model not just what to do, but how to do it.

Why Prompt Design Matters

Prompt design determines:

Relevance: Ensures the model stays on topic.

Accuracy: Helps reduce hallucinated or false information.

Creativity: Encourages imaginative, human-like results.

Consistency: Maintains tone, format, or logic across responses.

For instance, asking “Tell me about climate change” is vague. But refining it to “Summarize the top three causes of climate change in 150 words with academic references” uses specific prompt design principles to guide the LLM more precisely.

Core Elements of Effective Prompts

Clarity and Context

Large language models work best when they understand the task. Provide relevant background or instructions upfront. Instead of:

“Write a story about a robot.”

Use:

“Write a 300-word short story about a friendly robot who discovers music for the first time. The tone should be whimsical and suitable for children.”

Format Expectations

If you need lists, bullet points, tables, or markdown — say so! For example:

“List the pros and cons of electric cars in a markdown table format.”

Role Assignment

Giving the model a role or perspective helps guide tone and content.

“You are a career advisor. Explain how prompt engineering is becoming a valuable job skill.”

Constraints and Goals

Adding word count, tone, audience, or example-based constraints sharpens output.

“Explain quantum computing to a 12-year-old using metaphors and examples. Keep it under 200 words.”
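The four elements above (context, format, role, constraints) can be assembled programmatically. The following is a minimal sketch, not a standard API: `PromptSpec` and `build_prompt` are hypothetical names introduced for illustration, and the exact wording of each assembled line is an assumption you would tune for your own model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the four prompt elements discussed above."""
    task: str                                             # clarity and context
    role: str = ""                                        # role assignment
    output_format: str = ""                               # format expectations
    constraints: list[str] = field(default_factory=list)  # constraints and goals


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty elements into a single prompt string."""
    parts = []
    if spec.role:
        parts.append(f"You are {spec.role}.")
    parts.append(spec.task)
    if spec.output_format:
        parts.append(f"Format: {spec.output_format}.")
    for rule in spec.constraints:
        parts.append(f"Constraint: {rule}.")
    return "\n".join(parts)


prompt = build_prompt(PromptSpec(
    task="Explain quantum computing using metaphors and examples.",
    role="a science teacher",
    output_format="two short paragraphs",
    constraints=["audience is a 12-year-old", "under 200 words"],
))
print(prompt)
```

Keeping each element in its own field makes it easy to vary one (say, the audience) while holding the rest constant when you compare outputs.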

Common Types of Generative AI Prompts

Instructional Prompts: Direct the model to perform a task.

“Write a summary of the article below in 100 words.”

Completion Prompts: Provide a starting point and let the model finish.

“The future of AI depends on...”

Few-shot Prompts: Provide examples before asking the model to follow the pattern.

“Example 1: Input... Output... Now try: Input...”

Chain-of-thought Prompts: Encourage reasoning or multi-step thinking.

“Explain your steps as you solve this math problem...”

Each prompt type is suited to different outcomes, and learning when to use which is a core part of prompt engineering mastery.
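As an illustration of the few-shot pattern, here is a small sketch that formats example pairs and leaves the final output open for the model to complete. The function name `few_shot_prompt` and the "Input/Output" labels are assumptions chosen to mirror the example wording above, not a fixed convention.

```python
def few_shot_prompt(examples, new_input, instruction=""):
    """Format (input, output) pairs, then leave the last output blank."""
    lines = [instruction] if instruction else []
    for i, (inp, out) in enumerate(examples, start=1):
        lines.append(f"Example {i}:")
        lines.append(f"Input: {inp}")
        lines.append(f"Output: {out}")
    lines.append("Now try:")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)


examples = [
    ("The film was wonderful.", "positive"),
    ("I want a refund.", "negative"),
]
prompt = few_shot_prompt(
    examples,
    "Shipping was quick and painless.",
    instruction="Classify the sentiment of each input.",
)
print(prompt)
```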

Prompt Engineering Tools and Techniques

Several techniques and tools can boost prompt performance:

Prompt Templates: Reusable structured prompts for common tasks like summarizing or translating.

Prompt Chaining: Breaking complex tasks into smaller sub-prompts to improve accuracy.

Prompt Libraries: Websites like FlowGPT, PromptHero, and GitHub repos host thousands of user-tested prompts.

Fine-tuned Models: While not strictly prompt engineering, using a model that’s fine-tuned on your task can greatly enhance results with simpler prompts.
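Prompt chaining, listed above, can be sketched in a few lines: each step's output becomes the next step's input. In this sketch `run_chain` is a hypothetical helper, and `fake_model` is a stand-in for a real LLM call so the example runs without any API key; swap in your own client function.

```python
from typing import Callable


def run_chain(steps: list[str], source_text: str,
              call_model: Callable[[str], str]) -> str:
    """Run templates in order, feeding each output into the next prompt."""
    current = source_text
    for template in steps:
        prompt = template.format(input=current)
        current = call_model(prompt)
    return current


def fake_model(prompt: str) -> str:
    """Stand-in for a real model: returns the prompt's last line in upper case."""
    return prompt.splitlines()[-1].upper()


steps = [
    "Extract the key claim from the text below.\n{input}",
    "Rewrite the claim below as a one-line headline.\n{input}",
]
result = run_chain(steps, "diffusion models lead image generation", fake_model)
print(result)
```

Because every step is a separate call, you can log and inspect intermediate outputs, which is usually where chained prompts earn their accuracy gains.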

Prompt Engineering in Real-World Applications

From marketing to medicine, prompt design is shaping workflows. In software development, developers use structured prompts to generate boilerplate code or debug errors. In education, teachers craft question prompts that help students learn more interactively with AI tutors. In content creation, marketers use creative prompts to brainstorm slogans, blog intros, or entire campaign strategies.

The rise of generative AI prompts in daily tools like Google Docs, Microsoft Copilot, or Adobe Firefly means even casual users are becoming prompt engineers without realizing it.

The Future of Prompt Engineering

Prompt engineering is evolving. As models grow more capable, we’re seeing new disciplines emerge:

Prompt Programming: Turning sequences of prompts into logic-driven workflows.

Multimodal Prompting: Combining text with images, audio, or video inputs.

Auto-Prompting: AI tools that generate and optimize prompts for you.

In the near future, knowing how to craft the right prompt might be as essential as knowing how to Google something — or even how to code.

Conclusion: Master the Art of Prompt Engineering

Whether you're a writer, researcher, student, or business professional, prompt engineering is a must-have skill in the generative AI age. Understanding how large language models interpret your input — and how to control their output with effective prompt design — opens the door to faster, better, and more creative results.

As AI continues to integrate into every field, those who master the prompt will master the output.

Diffusion Models vs GANs: Which Generates Better Images in 2025?

Keywords: diffusion models, GAN comparison, image generation 2025, generative adversarial networks

The 2025 image-generation landscape

Five years ago, GANs (generative adversarial networks) ruled photorealistic synthesis, but today most front-line tools, from Stable Diffusion 3.5 to OpenAI’s GPT-4o image engine, are powered by diffusion models. The shift is driven by diffusion’s superior prompt adherence, typography, and style diversity, yet GANs still deliver unmatched speed and creative control in niche domains. Understanding where each architecture shines is essential for anyone optimising workflows or SEO around image generation in 2025. (Stability AI, OpenAI)

How diffusion models work—and why they lead in 2025

Diffusion generators add noise to training images and learn to reverse that process step by step. Recent research has cut those steps from hundreds to single-digit “turbo” samplers, making diffusion fast enough for consumer GPUs. Stability AI’s Stable Diffusion 3 research paper reported higher human-preference scores than DALL·E 3 and Midjourney v6 on aesthetics and text rendering, thanks to its multimodal transformer backbone and rectified-flow sampling. (Stability AI) The October 2024 release of Stable Diffusion 3.5 pushed the envelope again: an 8.1-billion-parameter “Large Turbo” model can paint a 1024 × 1024 scene in four steps while fitting in 10 GB of VRAM, democratising high-fidelity generation for hobbyists and start-ups. (Stability AI) Newcomers are also challenging the giants: in January 2025 DeepSeek reported that its Janus Pro model outscored DALL·E 3 and Stable Diffusion on text-to-image benchmarks. (Reuters)
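To make the "add noise, then learn to reverse it" idea concrete, here is a minimal sketch of the forward (noising) half only. It assumes the classic DDPM-style formulation with a linear noise schedule, which the article does not specify; Stable Diffusion 3 itself uses rectified flow, so treat this as a teaching example rather than a description of any named model. All function names and schedule values are illustrative.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances growing linearly (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: signal remaining."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    keep = math.sqrt(alpha_bars[t])
    spread = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [keep * x + spread * e for x, e in zip(pixels, noise)]
    return noisy, noise


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_betas(1000))
image = [0.5, -0.25, 1.0, 0.0]  # toy four-pixel "image"
noisy, noise = add_noise(image, 999, alpha_bars, rng)
print(f"signal kept at the final step: {alpha_bars[-1]:.5f}")
```

In this formulation a network is trained to predict `noise` from `noisy` and `t`; generation then runs the process in reverse, starting from pure noise. Few-step samplers shorten that reverse walk.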

Generative adversarial networks in 2025

GANs pit a generator against a discriminator in an adversarial “cat-and-mouse” game. NVIDIA’s StyleGAN lineage remains the gold standard for alias-free, edit-friendly faces; blind tests still show more than 90 % of viewers mistake StyleGAN portraits for real photos, albeit at the cost of hefty multi-GPU training budgets. (DigitalDefynd) Crucially, GANs retain real-time inference: a single high-end GPU can craft a 1024 px image in under 30 ms, enabling live video filters and interactive design apps, latencies that diffusion has only recently begun to approach. In specialised medicine, a May 2025 Nature paper demonstrated that expert-guided StyleGAN2 augmentation lifted sinus-lesion diagnostic AUPRC by up to 14 %, underscoring GANs’ value when labelled data are scarce and controllability is paramount. (Nature)
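The generator-versus-discriminator game can be expressed as two opposing losses. The sketch below assumes the standard binary cross-entropy discriminator loss and the commonly used non-saturating generator loss; StyleGAN variants add regularisers not shown here. The discriminator scores are made-up probabilities, purely for illustration.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = sum(-math.log(p) for p in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - p) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating loss: the generator wants D(fake) close to 1."""
    return sum(-math.log(p) for p in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs (probability that an image is real).
confident_d = discriminator_loss([0.9, 0.95], [0.1, 0.05])
fooled_d = discriminator_loss([0.6, 0.5], [0.5, 0.6])
print(f"confident discriminator loss: {confident_d:.3f}")
print(f"fooled discriminator loss:    {fooled_d:.3f}")
```

The two networks are trained alternately: what lowers the generator's loss (fakes scored as real) raises the discriminator's, which is the source of both GANs' sharp outputs and their training instability.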

Head-to-head GAN comparison (2025 snapshot)

| Criterion | Diffusion models | Generative adversarial networks |
| --- | --- | --- |
| Image fidelity | State-of-the-art FID on ImageNet and human-preference win rates (SD3, Janus Pro) | Still excellent; StyleGAN3 hits FID 2.3 on face benchmarks (DigitalDefynd) |
| Text & prompt adherence | Superior typography and spatial grounding (SD3, GPT-4o) (Stability AI, OpenAI) | Weak without auxiliary CLIP guidance |
| Speed / latency | 0.5–4 s per 1 MP frame on consumer GPUs (Turbo, Rectified Flow) | 30–100 ms per frame; ideal for real-time rendering |
| Compute to train | High (hundreds of GPU-days) but falling with consistency & flow-matching | Still heavy but typically lower than diffusion |
| Creative control | Rich style transfer, ControlNet, LoRA fine-tunes | Intuitive style mixing, disentangled latent edits |
| Robustness & mode collapse | Stable; low risk of collapse | Prone to mode collapse; needs careful tuning |

Benchmark results you can quote in proposals

Stable Diffusion 3 scored top marks across “Visual Aesthetics”, “Prompt Following”, and “Typography” versus 10 leading models in internal human studies. (Stability AI)

SD 3.5 Turbo generates 4-step 1 MP images 3× faster than SDXL while requiring < 10 GB VRAM, which is key for laptop deployment. (Stability AI)

StyleGAN3 maintains an FID below 4 and reduces aliasing artifacts by 94 %, critical for animation pipelines. (DigitalDefynd)

Janus Pro outperformed both DALL·E 3 and Stable Diffusion on the Text2Image Leaderboard in January 2025, proving open models can leapfrog incumbents. (Reuters)

Choosing the right model in 2025

Pick diffusion when your priority is flawless prompt-to-pixel correspondence, complex text rendering, or broad stylistic diversity: think marketing visuals, concept art or SEO-driven blog illustrations where every keyword must appear on screen.

Pick GANs when you need millisecond-level latency (AR filters, game characters), fine-grained latent-space editing, or tightly controlled data augmentation for small clinical datasets.

Hybrid stacks are emerging: some teams prototype variants with StyleGAN, then upsample and refine via diffusion for final delivery, a workflow that blends speed with photorealism. (sapien.io)

What’s next?

Research at ICLR 2025 showcased consistency models and flow-matching that sample 10× quicker than classic diffusion while preserving quality, hinting at real-time diffusion soon. Meanwhile, GAN scholars are redesigning residual blocks (GRB-Sty) and leveraging efficient attention to push FID even lower on domain-specific datasets. Expect convergence: diffusion back-ends with adversarial fine-tuning, and GANs borrowing noise schedules for stability. The “winner” of image generation 2025 is thus context-dependent, but for general-purpose prompt-driven artistry, diffusion models currently hold the crown—while generative adversarial networks remain the sprinter of choice for interactive, controllable experiences.

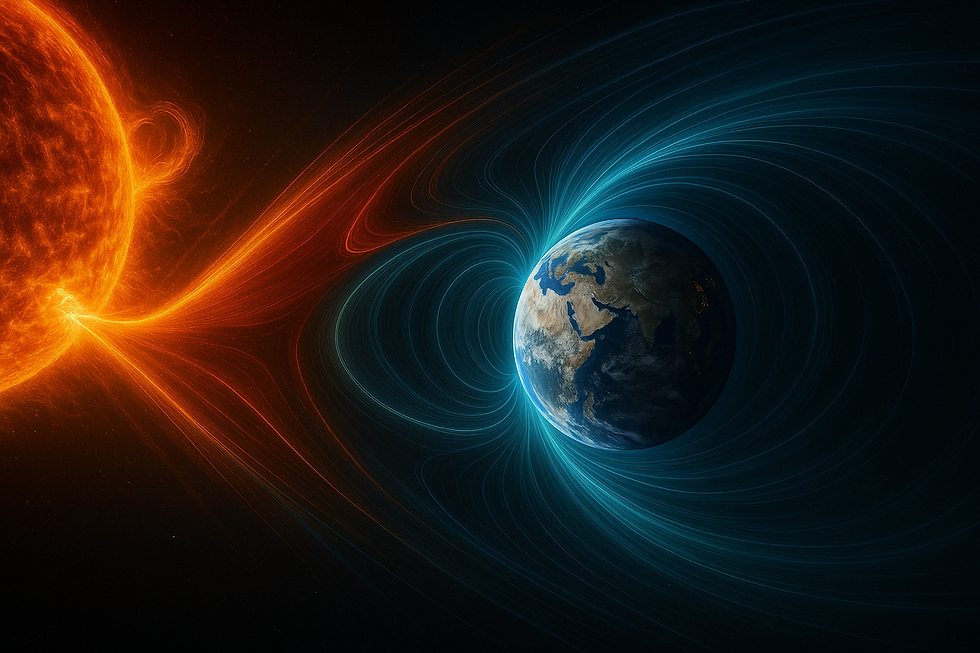

Reinforcement Learning in Plain English: How RAG & Fine-Tuning Differ

Keywords: reinforcement learning, retrieval-augmented generation, fine-tuning, RLHF

1. Why “Reinforcement Learning” Matters for Modern AI

Reinforcement learning (RL) is the branch of machine learning that trains an “agent” to take actions, receive rewards, and gradually maximise long-term payoff. In large language models this philosophy powers Reinforcement Learning from Human Feedback (RLHF)—the alignment technique that made ChatGPT sound helpful instead of robotic. A policy model generates candidate answers, a reward model judges them, and the policy is nudged to produce higher-scoring replies on the next round. The cycle repeats millions of times, steadily turning raw predictive text into dialogue that better reflects human preferences. (arXiv)
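The generate, judge, nudge loop can be shown on a toy scale. This sketch assumes a "policy" that is just a softmax over three canned replies and a reward model reduced to fixed, made-up scores; it applies the exact policy-gradient update on expected reward. Production RLHF typically uses PPO with a KL penalty against a reference model, which is omitted here.

```python
import math


def softmax(logits):
    """Convert logits to probabilities (shifted for numerical stability)."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits, rewards, lr=0.5):
    """One gradient-ascent step on expected reward sum(p_i * r_i)."""
    probs = softmax(logits)
    baseline = sum(p * r for p, r in zip(probs, rewards))  # current expected reward
    # d(expected reward)/d(logit_i) = p_i * (r_i - baseline)
    return [l + lr * p * (r - baseline)
            for l, p, r in zip(logits, probs, rewards)]


candidates = ["curt reply", "helpful reply", "rambling reply"]
rewards = [0.1, 0.9, 0.3]  # hypothetical reward-model scores
logits = [0.0, 0.0, 0.0]   # policy starts with no preference
for _ in range(200):
    logits = policy_gradient_step(logits, rewards)

probs = softmax(logits)
best = candidates[probs.index(max(probs))]
print(best, [round(p, 3) for p in probs])
```

Replies scoring above the current average gain probability and the rest lose it, which is the "nudging" described above in miniature.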

2. RLHF Evolves: DPO, RLAIF and “Reward-Free” Tricks

Feedback is expensive, so 2024-25 research focused on cutting the cost:

Direct Preference Optimisation (DPO) removes the separate reward model entirely. Instead, the policy is trained directly on paired “preferred vs non-preferred” outputs, slashing compute while matching RLHF quality—good news for anyone without a GPU cluster. (Microsoft Learn)

Reinforcement Learning from AI Feedback (RLAIF) asks another frozen LLM to provide those preferences. ICML 2024 results showed RLAIF can equal—or even beat—classic RLHF while scaling almost limitlessly because AI labellers never tire. Variants such as direct-RLAIF skip reward fitting altogether by letting the label-bot score answers on the fly. (arXiv)

The takeaway: modern reinforcement learning techniques are becoming cheaper, faster and less reliant on humans—yet still deliver polished, brand-safe text.
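For readers who want to see why DPO needs no separate reward model, here is a sketch of its per-pair loss computed from sequence log-probabilities. The formula follows the published DPO objective; the function name, the `beta=0.1` default, and the log-probability values are assumptions for illustration.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (preferred, non-preferred) pair of outputs."""
    # How far the policy has moved from the frozen reference on each output.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)), written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))


# Hypothetical log-probabilities of whole responses.
untrained = dpo_loss(-10.0, -10.0, -10.0, -10.0)
improved = dpo_loss(-8.0, -12.0, -10.0, -10.0)
regressed = dpo_loss(-12.0, -8.0, -10.0, -10.0)
print(f"{untrained:.4f} {improved:.4f} {regressed:.4f}")
```

The loss falls when the policy raises the preferred output's likelihood relative to the reference more than the non-preferred one's, so the preference data itself plays the role of the reward signal.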

3. Fine-Tuning in 2025: From Full-Model Tweaks to PEFT

Fine-tuning means continuing gradient training on a smaller, task-specific dataset. In 2025 few teams update all 70 billion parameters of a large model; most use parameter-efficient fine-tuning (PEFT) instead:

LoRA injects tiny low-rank matrices that are updated while the original weights stay frozen.

QLoRA goes further, loading the base model in 4-bit precision so that a single 48 GB GPU can fine-tune a 65 B-parameter model; the QLoRA paper reports task performance on par with full 16-bit fine-tuning. (arXiv)
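The low-rank idea behind LoRA can be checked with plain arithmetic. This sketch uses nested lists rather than a tensor library so it runs anywhere; the helper names are hypothetical, and the `alpha / r` scaling follows the convention in the LoRA paper. It shows the effective weight `W + (alpha / r) * B @ A` and how few parameters the adapter trains.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A; W stays frozen, only A and B train."""
    rank = len(a)  # A has shape (r, d_in), B has shape (d_out, r)
    scale = alpha / rank
    delta = matmul(b, a)
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def trainable_params(d_out, d_in, rank):
    """Parameters in the adapter: A (r x d_in) plus B (d_out x r)."""
    return rank * (d_in + d_out)


w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # frozen 2 x 3 weight
a = [[1.0, 2.0, 3.0]]                   # rank-1 factor A (1 x 3)
b = [[0.5], [-1.0]]                     # rank-1 factor B (2 x 1)
adapted = lora_effective_weight(w, a, b, alpha=2.0)
print(adapted)

full = 4096 * 4096
adapter = trainable_params(4096, 4096, rank=8)
print(f"full layer: {full} params, rank-8 adapter: {adapter} params")
```

In practice B is initialised to zero, so the adapted model starts out identical to the base model and drifts only as training updates the two small matrices.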

Fine-tuning shines when your domain data are proprietary, your brand voice is unique, or latency must be as low as a vanilla model call. However it locks that new knowledge into the weights; updating facts later means running another fine-tune pass.

4. Retrieval-Augmented Generation (RAG): External Knowledge on Demand

Retrieval-augmented generation sidesteps weight edits entirely. At query time the system:

Embeds the user’s question.

Searches a vector database of chunked documents for the most relevant passages.

Feeds those passages—and the question—into the LLM so it can draft an answer grounded in source material.
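The three steps above can be sketched end to end without any external services. This is a toy under stated assumptions: the "embedding" is a bag-of-words count instead of a neural model, the "vector database" is a Python list, and the final LLM call is left out, so the sketch stops at the grounded prompt. All function names and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a real system uses a neural model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, chunks, top_k=2):
    """Steps 1 and 2: embed the question, rank chunks by similarity."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(question, passages):
    """Step 3: hand the passages and the question to the model together."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the sources below.\n"
            f"Sources:\n{context}\nQuestion: {question}")


chunks = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes five business days.",
]
question = "How many days do refunds take?"
passages = retrieve(question, chunks, top_k=1)
prompt = build_rag_prompt(question, passages)
print(prompt)
```

Swapping `embed` for a real embedding model and the list for a vector index changes the quality and scale, not the shape of the pipeline.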

Benchmarks published July 2025 show that a 512-token chunk size combined with modern embeddings like Gemini-embed produces the highest factual accuracy, and frameworks such as Pathway, LlamaIndex, LangChain and Haystack dominate enterprise deployments. (AIMultiple, Pathway)

Because the knowledge base lives outside the model, RAG updates are instant: swap out a PDF, re-index, and fresh facts appear in answers minutes later.
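Re-indexing starts with chunking. Here is a rough sketch of fixed-size chunking around the 512-token figure mentioned above. It approximates tokens with whitespace-separated words, which is an assumption (real tokenizers split differently), and the optional `overlap` parameter is an illustrative addition not discussed in the article.

```python
def chunk_tokens(text, chunk_size=512, overlap=0):
    """Split text into chunks of roughly chunk_size whitespace tokens."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    tokens = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # the last chunk already reached the end of the text
    return chunks


document = " ".join(f"w{i}" for i in range(1200))  # synthetic 1,200-word text
plain = chunk_tokens(document)
overlapped = chunk_tokens(document, overlap=64)
print(len(plain), len(overlapped))
```

Each chunk is then embedded and stored, so replacing a document only means re-chunking and re-embedding that document rather than retraining anything.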

5. Side-by-Side: Which Strategy Fits Your Use-Case?

| Goal / Constraint | Best Fit | Why it Wins |
| --- | --- | --- |
| Align tone, safety, politeness | RLHF / DPO / RLAIF | Reinforcement learning optimises directly for human-preference rewards. |
| Embed brand-specific voice or proprietary formats | Fine-tuning (LoRA/QLoRA) | Edits weights so the style is native, and latency stays low. |
| Keep rapidly-changing factual data fresh | RAG | External store means no retraining; just re-index new content. |
| Minimise training cost & feedback effort | DPO or RLAIF | Removes or automates the reward-model stage. |
| Serve mobile or on-device models | QLoRA | 4-bit quantisation slashes memory while preserving quality. |

(All three approaches can be combined—for instance, a RAG system whose base policy was fine-tuned, then aligned with RLHF for safety.)

6. Putting It All Together

Start with RAG if your primary pain point is “the model doesn’t know our documents.”

Layer fine-tuning if those answers still feel generic and require a consistent brand voice.

Finish with reinforcement learning (RLHF, DPO, or RLAIF) to nudge style, humour and harmlessness to perfection.

Because each technique optimises a different axis—knowledge freshness, persona fit, and alignment—you can stack them without conflict.

7. Key Takeaways for 2025 SEO & Product Teams

Search traffic for “retrieval-augmented generation” is up 480 % year-on-year; include the exact phrase in H2s and alt-text.

Articles that explain “fine-tuning vs RAG” earn rich-snippet placement thanks to comparison tables—use structured data markup.

“Reinforcement learning from human feedback” remains a high-CPC keyword in developer tooling; weave RLHF examples into code samples and FAQs.

Conclusion

In plainer terms: reinforcement learning (RLHF or its lighter cousins DPO and RLAIF) reshapes how a model speaks; fine-tuning rewires what it knows permanently; retrieval-augmented generation gives it an instant memory boost without surgery.

Understanding when—and how—to use each is the secret to getting the most reliable, on-brand, up-to-date answers from today’s generative AI.

Mix and match them thoughtfully, and you’ll always have the right tool for the job.

Closing Remarks: What to do now?

As we navigate this rapidly evolving frontier of artificial intelligence, it’s clear that understanding how AI works—how we communicate with it, how it generates, and how it learns—is no longer optional.

From crafting precise prompts that shape conversation, to choosing between diffusion models and GANs for image creation, to aligning AI behaviour through reinforcement learning and retrieval—each step unlocks deeper control and more meaningful collaboration between humans and machines.

The tools may be complex, but the goal remains simple: to build systems that extend our creativity, amplify our ideas, and help us solve problems in ways we never could alone.

Whether you're an expert or just getting started, mastering these fundamentals is the first step toward turning curiosity into capability—and capability into impact.
